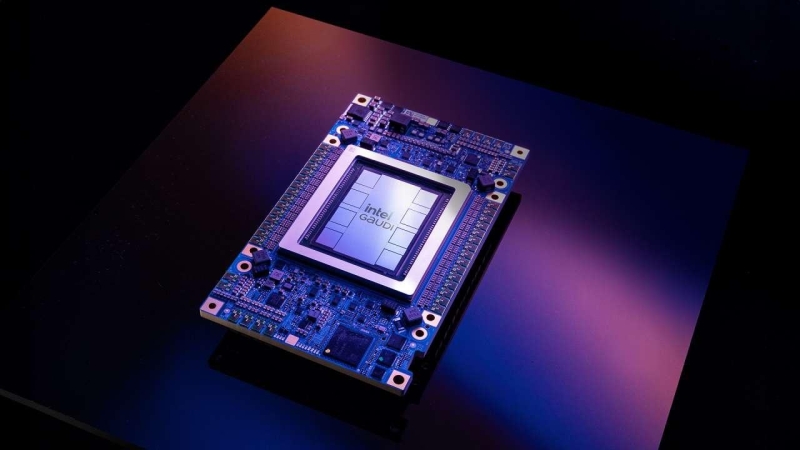

Intel Gaudi 3

Intel Corporation

In a move that directly challenges Nvidia in the lucrative AI training and inference markets, Intel announced its long-anticipated Intel Gaudi 3 AI accelerator at its Intel Vision event.

The new accelerator offers significant improvements over the previous-generation Gaudi 2 processor, promising to bring new competitiveness to training and inference for LLMs and multimodal models.

Gaudi 3

Gaudi 3 significantly increases AI compute capabilities, delivering substantial improvements over Gaudi 2 and competitors, particularly in processing BF16 data types, which are crucial for AI workloads.

Manufactured on a 5nm process, Gaudi 3 incorporates significant architectural advancements, including more TPCs and MMEs. These provide the computing power needed for the parallel processing of AI operations, significantly reducing training and inference times for complex AI models.

Gaudi 3 expands its hardware capabilities with more Matrix Multiplication Engines (MMEs) and Tensor Processor Cores (TPCs) than its predecessor, Gaudi 2. Specifically, it increases from 2 to 4 MMEs and 24 to 32 TPCs, strengthening its processing power for AI workloads.

The new accelerator boasts FP8 throughput of 1835 TFLOPS, doubling the performance of Gaudi 2. It also significantly boosts BF16 performance, although specific throughput figures for this improvement were not disclosed.

It has 128GB of HBM2e memory, providing 3.7 TB/s of memory bandwidth, and 96MB of onboard static RAM (SRAM). This large memory capacity and bandwidth supports processing large datasets efficiently, which is essential for training and running large AI models.
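To put those memory figures in perspective, here is a rough back-of-the-envelope sketch of how long one full pass over the 128GB of HBM would take at the quoted 3.7 TB/s. It assumes decimal units (1 TB = 1000 GB) and peak bandwidth with no overhead, so real workloads would be slower; the function name is purely illustrative.

```python
# Figures quoted in the article.
HBM_CAPACITY_GB = 128       # HBM2e capacity in GB
HBM_BANDWIDTH_TBPS = 3.7    # peak memory bandwidth in TB/s


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Time in milliseconds to read the whole memory once at peak bandwidth.

    Assumption: decimal units (1 TB = 1000 GB) and no protocol or access
    overhead, so this is a lower bound rather than a measured value.
    """
    bandwidth_gb_per_s = bandwidth_tbps * 1000
    seconds = capacity_gb / bandwidth_gb_per_s
    return seconds * 1000


sweep_ms = full_memory_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TBPS)
sweeps_per_second = 1000 / sweep_ms

print(f"One full pass over HBM: about {sweep_ms:.1f} ms")
print(f"Upper bound on full passes per second: about {sweeps_per_second:.1f}")
```

For memory-bound work that has to touch most of the model's weights on every step, this kind of estimate gives a ceiling on how many steps per second a single accelerator could sustain.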

High-speed, low-latency networking is critical when building clusters of accelerators to tackle large training jobs. While Nvidia builds its accelerators around proprietary interconnects like NVLink, Intel is all-in on standard Ethernet-based networking.

Gaudi 3 reflects this, including twenty-four 200Gb Ethernet ports, significantly improving its networking capabilities. This ensures scalable and flexible system connectivity, enabling the efficient scaling of AI compute clusters without being locked into proprietary networking technologies.
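The aggregate networking capacity implied by those ports is simple arithmetic, sketched below. It uses only the port count and per-port speed quoted above and reports the raw line rate; Ethernet framing and protocol overhead are ignored, so usable throughput would be somewhat lower. The function name is illustrative.

```python
# Figures quoted in the article.
PORTS = 24              # Ethernet ports per accelerator
GBITS_PER_PORT = 200    # line rate per port in Gb/s


def aggregate_ethernet_bandwidth(ports: int, gbits_per_port: int) -> tuple[int, float]:
    """Return the raw aggregate line rate as (Gb/s, GB/s).

    Assumption: raw line rate only, with no framing or protocol overhead.
    """
    total_gbits = ports * gbits_per_port
    total_gbytes = total_gbits / 8  # 8 bits per byte
    return total_gbits, total_gbytes


total_gbits, total_gbytes = aggregate_ethernet_bandwidth(PORTS, GBITS_PER_PORT)
print(f"Aggregate line rate: {total_gbits} Gb/s, or {total_gbytes:.0f} GB/s")
```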

Performance

Intel’s Gaudi 3 AI accelerator shows strong performance improvements across several key areas relevant to AI training and inference tasks, particularly for LLMs and multimodal models.

Intel projects that Gaudi 3 will significantly outperform competing products like Nvidia’s H100 and H200 in training speed, inference throughput, and power efficiency for various parameterized models.

Intel also projects that Gaudi 3 will deliver an average 50% faster training time, along with superior inference throughput and power efficiency, versus leading competitors for several parameterized models. This includes a greater inference performance advantage on longer input and output sequences.

Analyst’s Take

Intel’s Gaudi 3 AI accelerator is a strategic move by Intel to gain a stronger position in the supply-hungry AI accelerator market, directly challenging Nvidia to address the burgeoning demand for advanced AI compute solutions.

Intel built a compelling solution, bringing significant performance improvements over Gaudi 2 and delivering an offering that will challenge the market. The 4x AI compute for BF16, 1.5x increase in memory bandwidth,